- What is a Number, That a Large Language Model May Know It? Raja Marjieh, Veniamin Veselovsky, Thomas L. Griffiths, Ilia Sucholutsky (2025)
- Metaphors We Live By. George Lakoff, Mark Johnson (1981)
- Being There: Putting Brain, Body, and World Together Again. Andy Clark (1997)
- Solaris. Stanislaw Lem (1961)

Abstract

As Large Language Models (LLMs) continue to advance, they exhibit certain cognitive patterns similar to those of humans that are not directly specified in training data. This study investigates this phenomenon by focusing on temporal cognition in LLMs. Leveraging the similarity judgment task, we find that larger models spontaneously establish a subjective temporal reference point and adhere to the Weber-Fechner law, whereby the perceived distance logarithmically compresses as years recede from this reference point. To uncover the mechanisms behind this behavior, we conducted multiple analyses across neuronal, representational, and informational levels. We first identify a set of temporal-preferential neurons and find that this group exhibits minimal activation at the subjective reference point and implements a logarithmic coding scheme convergently found in biological systems. Probing representations of years reveals a hierarchical construction process, where years evolve from basic numerical values in shallow layers to abstract temporal orientation in deep layers. Finally, using pre-trained embedding models, we found that the training corpus itself possesses an inherent, non-linear temporal structure, which provides the raw material for the model's internal construction. In discussion, we propose an experientialist perspective for understanding these findings, where the LLMs' cognition is viewed as a subjective construction of the external world by its internal representational system. This nuanced perspective implies the potential emergence of alien cognitive frameworks that humans cannot intuitively predict, pointing toward a direction for AI alignment that focuses on guiding internal constructions.

What Did We Find?

Using the similarity judgment task, a paradigm from cognitive science, we found that larger models spontaneously establish a subjective temporal reference point, and that their perception of temporal distance adheres to the Weber-Fechner law, a psychophysical law found in the human brain.
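For readers unfamiliar with the law, it can be written out as follows. The first expression is the classical Weber-Fechner form, where perceived magnitude $\psi$ grows with the logarithm of stimulus intensity $S$ relative to a threshold $S_0$. The second is one possible way to apply it to years; the symbols $y_{\mathrm{ref}}$ and $k$, and the $+1$ inside the logarithm (added so that the reference year itself maps to zero), are illustrative assumptions rather than the paper's exact definition.

$$
\psi = k \ln\frac{S}{S_0}
\qquad\Longrightarrow\qquad
\psi(y) = k \ln\bigl(1 + |y - y_{\mathrm{ref}}|\bigr),
\quad
d(y_i, y_j) = \bigl|\psi(y_i) - \psi(y_j)\bigr|
$$

Taking $y_{\mathrm{ref}} = 2025$ purely for illustration, a one-year gap near the reference point gives $d(2020, 2021) = k \ln(6/5) \approx 0.182\,k$, while the same gap far from it gives $d(1620, 1621) = k \ln(406/405) \approx 0.0025\,k$. Equal calendar distances shrink in perceived distance as years recede from the reference point.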

How Does It Happen?

We investigated the underlying mechanisms of this human-like cognitive pattern in LLMs through a multi-level analysis across neuronal, representational, and informational aspects.

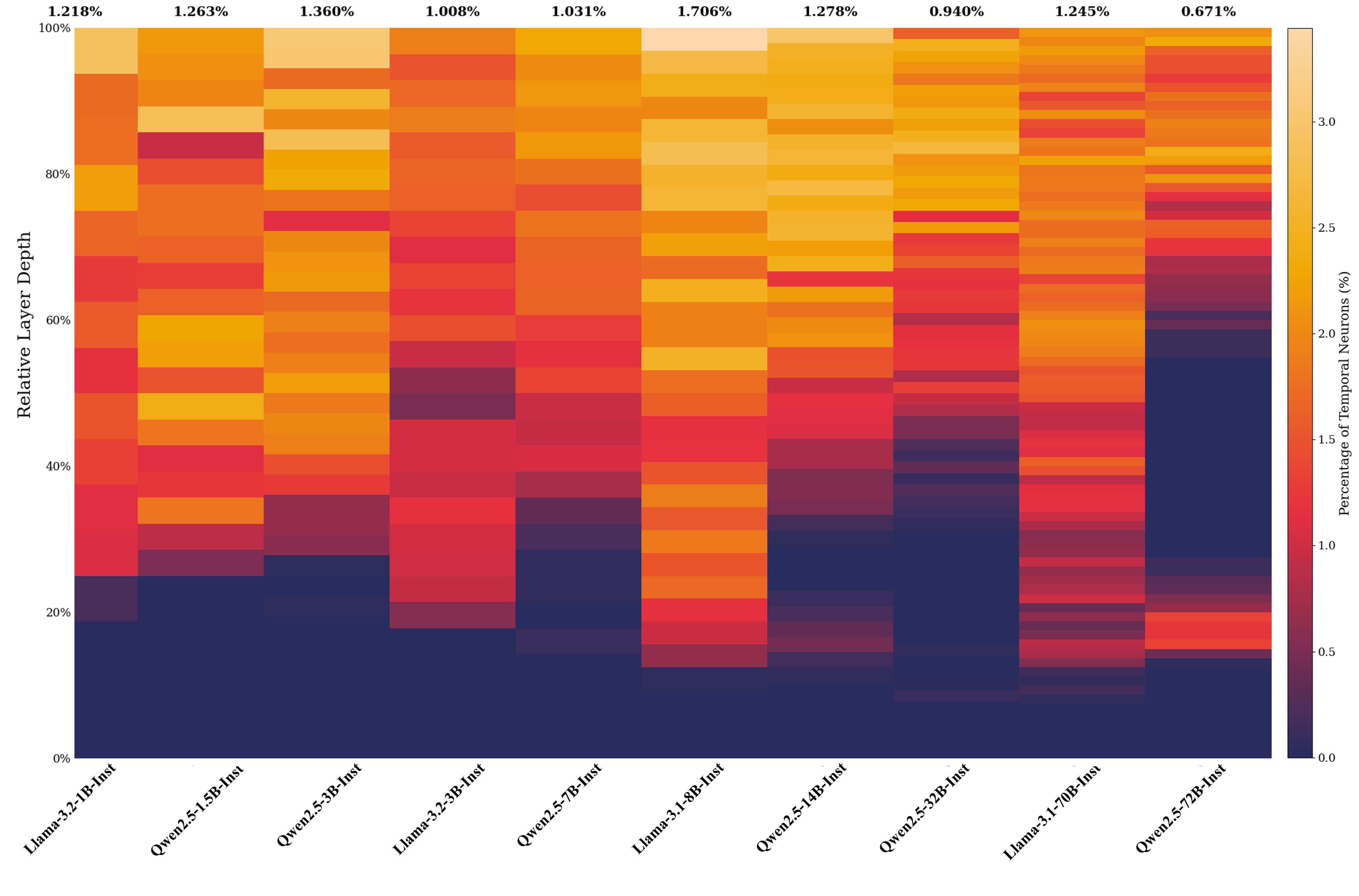

Level 1: Neural Coding - finding temporal-preferential neurons

This figure shows the distribution of temporal-preferential neurons across model layers. We identified these neurons by their consistently stronger activation to inputs formatted as "Year: x-x-x-x" compared to the control condition "Number: x-x-x-x". These specialized neurons represent a small fraction of the total, typically ranging from 0.67% to 1.71%, and are mostly distributed in the middle-to-late layers of the network.
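The selection step can be illustrated with a minimal sketch. It assumes activations have already been recorded for paired prompts, and it reads "consistently stronger" as "stronger on every pair", which is only one possible criterion. The function name, the `margin` parameter, and the toy data are hypothetical and not taken from the paper.

```python
import numpy as np

def temporal_preferential_neurons(act_year, act_number, margin=0.0):
    """Indices of neurons that fire more strongly for 'Year' than 'Number'.

    act_year, act_number: arrays of shape (n_prompts, n_neurons). Row i of
    each holds activations for the same digits under the two prefixes.
    margin: hypothetical minimum gap required on every prompt pair.
    """
    act_year = np.asarray(act_year, dtype=float)
    act_number = np.asarray(act_number, dtype=float)
    diff = act_year - act_number
    # Assumption: "consistently" means the gap holds for every prompt pair.
    consistent = np.all(diff > margin, axis=0)
    idx = np.flatnonzero(consistent)
    # Rank the selected neurons by mean gap, largest first.
    order = np.argsort(-diff[:, idx].mean(axis=0))
    return idx[order]

# Toy data: 200 neurons, two of which are made year-preferential by hand.
rng = np.random.default_rng(0)
n_prompts, n_neurons = 50, 200
act_number = rng.normal(size=(n_prompts, n_neurons))
act_year = act_number + rng.normal(scale=0.1, size=(n_prompts, n_neurons))
act_year[:, [3, 7]] += 2.0

selected = temporal_preferential_neurons(act_year, act_number)
fraction = selected.size / n_neurons
```

A softer criterion, such as a paired statistical test per neuron, would be a reasonable alternative when activations are noisy.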

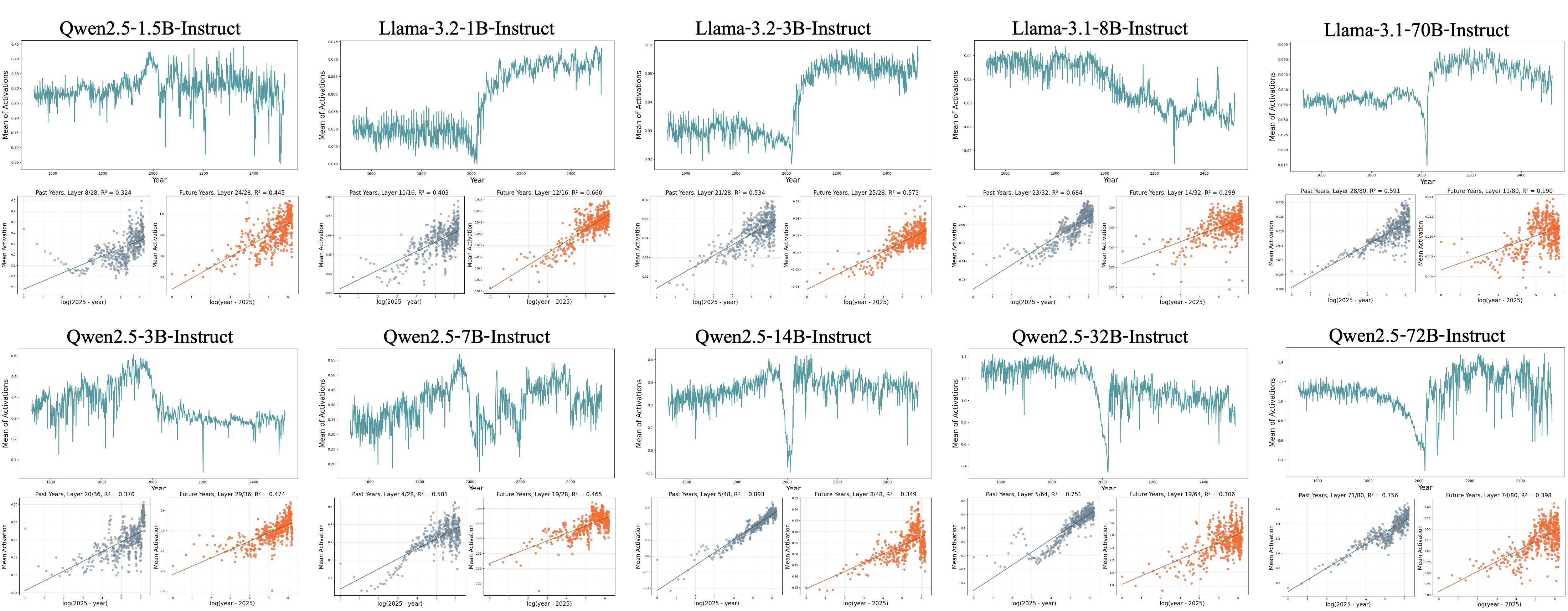

Level 1: Neural Coding - Logarithmic Compression (Weber-Fechner Law)

The upper panels show the mean activation of the top 1000 temporal-preferential neurons for each year from 1525 to 2524. In larger models, a distinct trough appears, with activation increasing in a logarithmic-like compression pattern as years recede from this point. This is consistent with the neural basis of the Weber-Fechner law found in the human brain, likely a convergent solution for efficient information coding.

The bottom panels show a layer-wise regression analysis of neuron activations against the logarithmic distance from a subjective reference point. The results indicate that neurons across all the models exhibit this logarithmic encoding scheme to some extent.
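The regression idea can be sketched on synthetic data. The sketch below assumes the logarithmic distance takes the form log(1 + |year - ref|) and that the reference point is estimated by scanning candidate years for the best fit; both are illustrative assumptions, and the activations are simulated rather than taken from any model.

```python
import numpy as np

def log_distance(years, ref):
    """Assumed form of the logarithmic distance from a reference year."""
    return np.log1p(np.abs(np.asarray(years, dtype=float) - ref))

def fit_log_code(years, activation, ref):
    """Least-squares fit: activation ~ a + b * log_distance. Returns (b, R^2)."""
    x = log_distance(years, ref)
    X = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(X, activation, rcond=None)
    pred = X @ coef
    ss_res = np.sum((activation - pred) ** 2)
    ss_tot = np.sum((activation - activation.mean()) ** 2)
    return float(coef[1]), float(1.0 - ss_res / ss_tot)

# Simulated mean activation with a trough at a hypothetical reference year.
years = np.arange(1525, 2525)
rng = np.random.default_rng(0)
true_ref = 2025  # illustrative only
activation = 0.5 * log_distance(years, true_ref) + rng.normal(scale=0.05, size=years.size)

# Scan candidate reference years and keep the one with the highest R^2.
candidates = np.arange(1900, 2100)
r2 = np.array([fit_log_code(years, activation, c)[1] for c in candidates])
best_ref = int(candidates[np.argmax(r2)])
slope, best_r2 = fit_log_code(years, activation, best_ref)
```

Running the same fit separately on each layer's activations would give a layer-wise curve of R^2 values of the kind the bottom panels describe.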

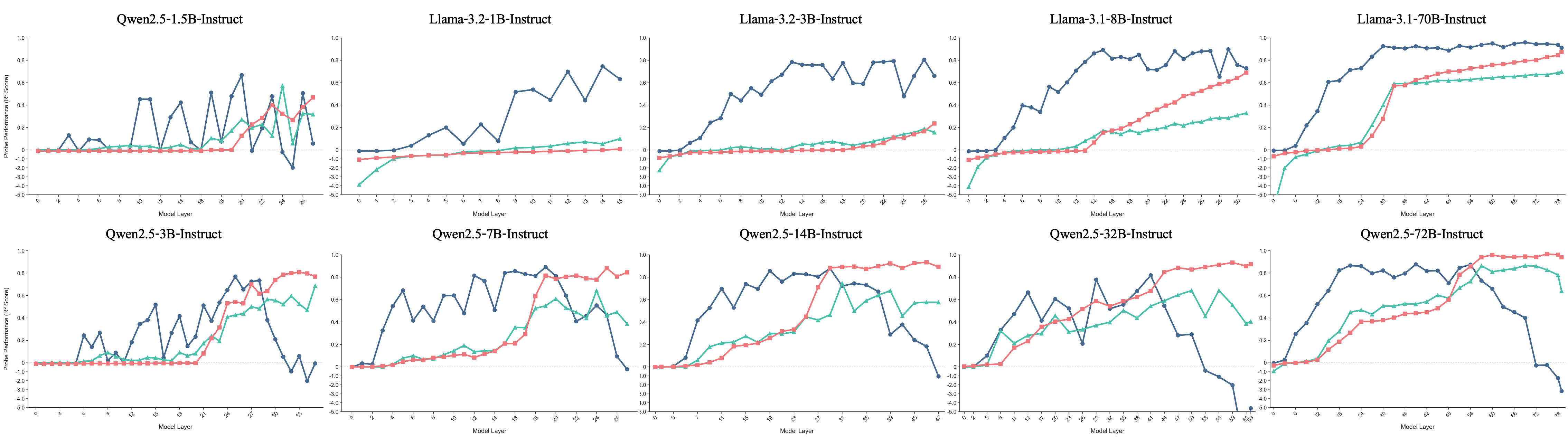

Level 2: Representational Structure - Evolving a Timeline

We extracted residual stream representations from each model layer during the similarity judgment task to analyze their evolving patterns. We then regressed these representations against three theoretical distances: the Log-Linear distance (circle, numerical features), the Levenshtein distance (triangle, string features), and the Reference-Log-Linear distance (square, subjective timeline with Weber's law).

Results show that in larger models, a 'year' is progressively transformed from a numerical feature in early layers to a temporal value in deeper layers. In Qwen models, this involves an active suppression of the initial numerical representation as the temporal one emerges.
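To make the three theoretical distances concrete, here is a minimal sketch that builds each as a pairwise matrix and scores it against pairwise distances between representations. The exact formulas are assumptions (difference of log years, edit distance between year strings, and difference of log(1 + |year - ref|)), as are the function names and the synthetic hidden states, which are constructed to follow the reference-based timeline.

```python
import numpy as np

def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance between two strings."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]

def theoretical_distances(years, ref):
    """Pairwise distance matrices under three assumed definitions."""
    y = np.asarray(years, dtype=float)
    log_y = np.log(y)
    psi = np.log1p(np.abs(y - ref))
    strings = [str(int(v)) for v in years]
    lev = np.array([[levenshtein(s, t) for t in strings] for s in strings], dtype=float)
    return {
        "log_linear": np.abs(log_y[:, None] - log_y[None, :]),
        "levenshtein": lev,
        "reference_log_linear": np.abs(psi[:, None] - psi[None, :]),
    }

def r_squared(pred, target):
    """R^2 of a one-predictor linear regression over the upper triangle."""
    iu = np.triu_indices_from(target, k=1)
    r = np.corrcoef(pred[iu], target[iu])[0, 1]
    return float(r ** 2)

# Synthetic "deep layer" states that encode distance from a reference year.
years = np.arange(1925, 2125, 5)
rng = np.random.default_rng(0)
psi = np.log1p(np.abs(years - 2025.0))
hidden = np.outer(psi, rng.normal(size=16)) + rng.normal(scale=0.01, size=(years.size, 16))
rep_dist = np.linalg.norm(hidden[:, None, :] - hidden[None, :, :], axis=-1)

scores = {name: r_squared(d, rep_dist) for name, d in theoretical_distances(years, ref=2025).items()}
best = max(scores, key=scores.get)
```

Repeating the scoring on each layer's representations would show which distance dominates at which depth.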

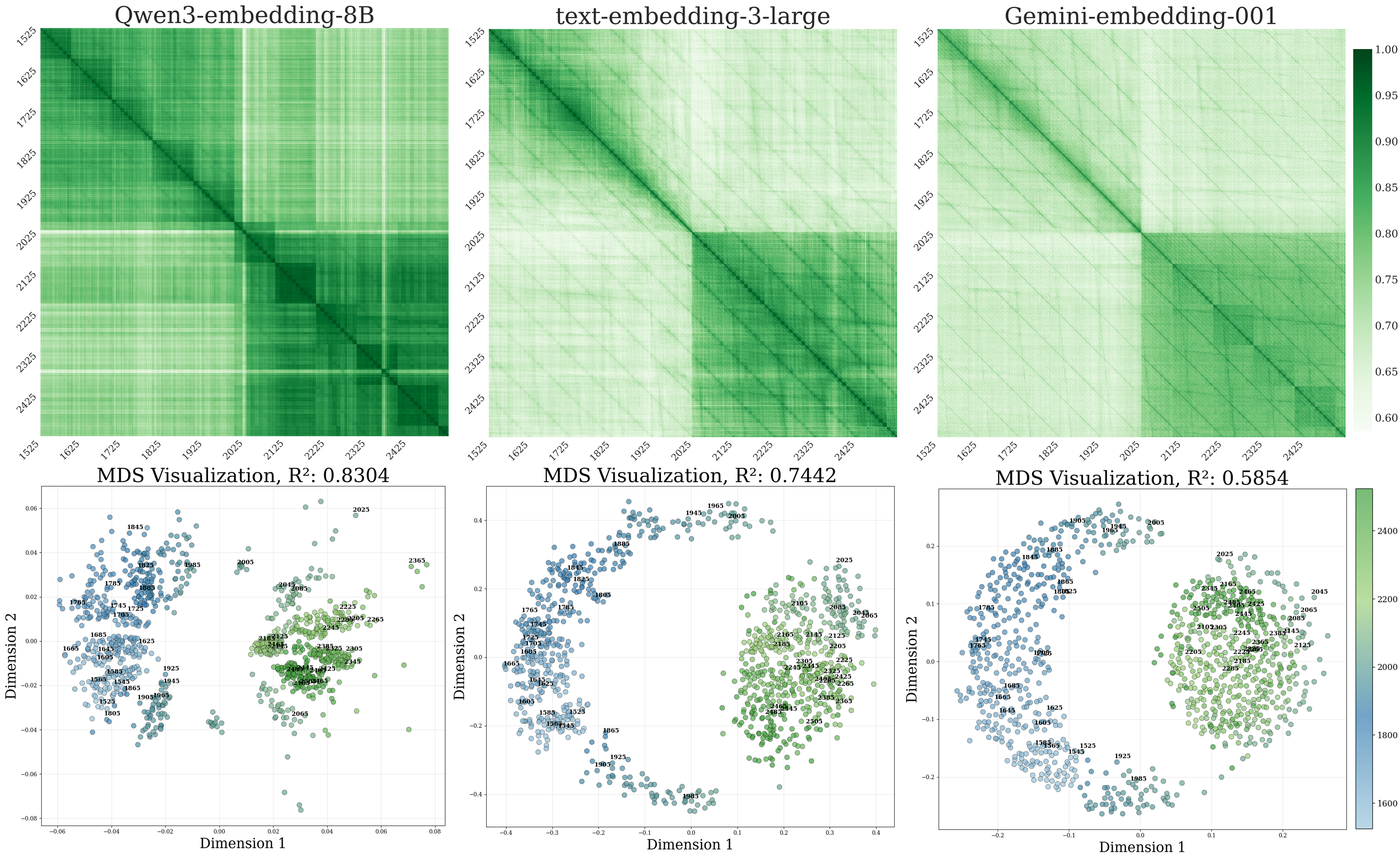

Level 3: Information Exposure - The Blueprint in the Data

We used three independent, pre-trained embedding models to test if this pattern pre-exists in the training data. We computed the pairwise cosine similarity of embeddings for each "Year: x-x-x-x" from 1525 to 2524.

The results revealed a similar, non-linear temporal structure inherent in the data corpora. In particular, future years show higher similarity to each other, likely because the training data contain comparatively little information about them. This suggests the training corpora provide the raw material for the temporal cognition we observe in LLMs.
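The similarity computation itself is simple and can be sketched as follows. The prompt format assumes that "x-x-x-x" means the four digits of the year joined by hyphens. The embeddings here are random placeholders standing in for the output of a real embedding model, so the resulting matrix shows only the mechanics, not the reported structure.

```python
import numpy as np

def cosine_similarity_matrix(emb):
    """Pairwise cosine similarity between the rows of an embedding matrix."""
    emb = np.asarray(emb, dtype=float)
    norms = np.linalg.norm(emb, axis=1, keepdims=True)
    unit = emb / np.clip(norms, 1e-12, None)  # guard against zero vectors
    return unit @ unit.T

# One prompt per year; the digit-by-digit format is an assumed reading.
years = list(range(1525, 2525))
prompts = [f"Year: {'-'.join(str(y))}" for y in years]

# Placeholder embeddings; in practice each row would come from an
# embedding model applied to the matching prompt.
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(len(prompts), 32))

sim = cosine_similarity_matrix(embeddings)
```

With real embeddings, plotting `sim` as a heatmap indexed by year would expose any block structure, such as a cluster of mutually similar future years.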

How To Understand These Results?

Our work establishes an experientialist perspective to understand these findings. We propose that Large Language Models (LLMs) do not merely reorganize training data, but actively construct a subjective model of the world from their informational 'experience'. This viewpoint helps us move beyond seeing LLMs as either simple statistical engines or human-like minds. While they exhibit human-like cognitive patterns, they possess fundamentally different architectures and learn from a static, disembodied world of text. Therefore, the most significant risk may not be that LLMs become too human, but that they develop powerful yet alien cognitive frameworks we cannot intuitively anticipate.

This perspective has profound implications for AI alignment. Traditional approaches focus on controlling a model’s external behavior, but the experientialist view suggests that robust alignment requires engaging directly with the formative process by which a model builds its internal world. The goal must shift from simply trying to make AI safe through external constraints to making safe AI from the ground up: systems whose emergent cognitive patterns are inherently aligned with human values. This calls for multi-level efforts, from monitoring a model's internal representations to carefully curating the environments it learns from.

Cite Our Work 🖤

@misc{li2025mindlanguagemodelsexhibit,
  title={The Other Mind: How Language Models Exhibit Human Temporal Cognition},
  author={Lingyu Li and Yang Yao and Yixu Wang and Chubo Li and Yan Teng and Yingchun Wang},
  year={2025},
  eprint={2507.15851},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2507.15851},
}